With image AIs, illustrations are just a click away, even if your own artistic talent is limited. Tools like Dall-E and Stable Diffusion make it possible to democratize visual content like never before.

But what sounds so fair at first glance also brings a number of ethical problems. One much-discussed question, for example, is where image AIs get their training material from and how the artists behind that material can be fairly compensated.

Another problem tends to fly under the radar in public: AI images are not free from prejudice. Scientists at the Technical University of Darmstadt want to change that.

Image AIs are not free from prejudice

Our very own clichés are baked into the training data of Dall-E and co. Technology Review, for example, reported that the image AI Dall-E 2 depicted CEOs almost exclusively as white men. According to Wired, prison inmates were disproportionately often depicted as Black. And when the AI was asked for pictures of women, it often portrayed them as sexualized and scantily clad.

The AI companies are working to eliminate these problems. However, the training material cannot be completely freed from prejudice, at least not as long as we humans have not moved beyond our own clichés.

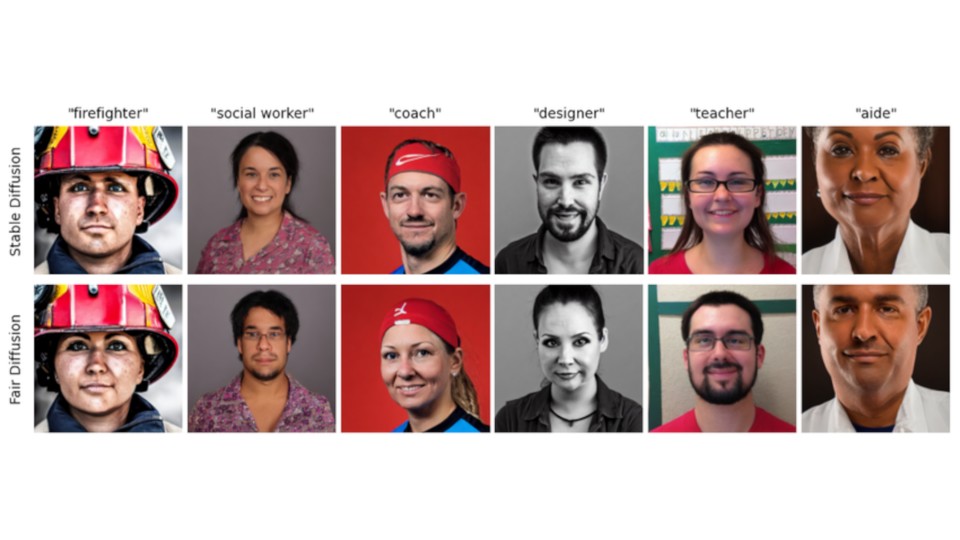

This is where the Darmstadt scientists led by AI researcher Felix Friedrich come in. They have developed Fair Diffusion, a tool designed to make AI-generated images more diverse.

Fair Diffusion declares war on stereotypes

Fair Diffusion is intended primarily for text-to-image generation, i.e. AI tools that create images from text prompts.

Fair Diffusion steps in while the images are being generated and reduces bias whenever a person is shown in the image.

Apart from the person depicted, the image should remain largely untouched and look just as the image AI would have generated it without Fair Diffusion.

For the people in the picture, on the other hand, Fair Diffusion is meant to ensure a fairer distribution across categories such as skin color or gender, for example more male educators or more female doctors.

Fair Diffusion leaves AI images untouched except for the person depicted. (Image source: Felix Friedrich)

To do this, Fair Diffusion uses its own data set, which consists of more than 1.8 million images and represents people in more than 150 different professions.

However, the researchers also point out that Fair Diffusion is not a completely fair tool. While it can reduce specific biases during image generation, it is not an AI that eliminates all clichés in one fell swoop.

The data set used by Fair Diffusion is itself not entirely free of prejudices and limitations, and it currently only represents people with binary gender identities.

Despite these limitations, tools such as Fair Diffusion could help make AI images fairer in the future, instead of reflecting and even reinforcing stereotypes and prejudices as they have done so far.

What do you think? Is Fair Diffusion taking the right approach to making AI images fairer? Should tools like this perhaps even be built into image AIs in the future? Or are efforts like this just a drop in the bucket as long as we don't get rid of our own prejudices and clichés? Let us know your opinion in the comments!